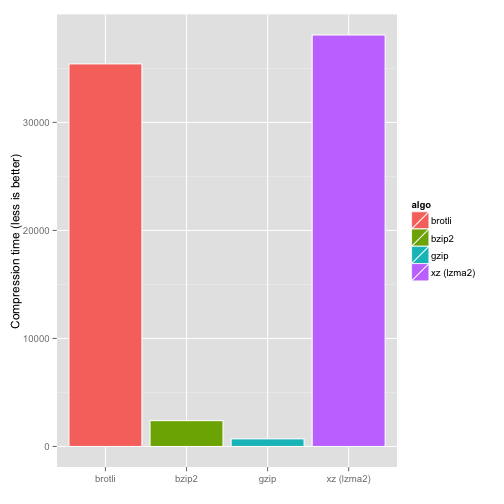

Part of the backup and restoration process is compression and decompression speed. This essentially comes down to the compression algorithm and tool you use and the system resources you have available, i.e. CPU speed, number of CPU cores/threads, and memory. The highest compression ratio is usually attained with slower, more compute-intensive algorithms, and compression speed can vary by a factor of 20 or more between the fastest and slowest levels. It seems that gzip's best use case is level 4 if you want a good balance of compression against compression speed: level 1 compression scored 57.7 and level 9 scored 63.

As an example of this trade-off, Arch uses `zstd -c -T0 --ultra -20 -`. Compared to xz, the size of all compressed packages combined increased by 0.8%, decompression became 14 times faster, and decompression memory increased by 50 MiB when using multiple threads.

Since the xz utils in Debian/Ubuntu are an old version, I’ve created my own backports that I can use:

`echo "deb debian main" | sudo tee /etc/apt//packages-matoski-com.list`

This should give you a newer version of xz that supports multithreading.

Let’s take a directory with a lot of files and subdirectories. To compress it all we would do:

`tar -cf - directory/ | xz -9e --threads=8 -c - > …`

Or, if you want, you can do it separately with a progress bar.

On newer versions of tar you can also do the following:

`XZ_OPT="-9e --threads=8" tar cJf archive.tar.xz directory`

The `XZ_OPT` environment variable lets you set xz options that cannot be passed via calling applications such as tar. With a recent GNU tar on bash or a derived shell: `XZ_OPT=-9 tar cJf archive.tar.xz directory`. tar's lowercase `j` switch uses bzip2; the uppercase `J` switch uses xz.
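If you are scripting backups in Python rather than calling tar and xz directly, the same idea can be sketched with the standard library alone. `make_tar_xz` builds a `.tar.xz` with preset `9 | lzma.PRESET_EXTREME`, which corresponds to xz's `-9e`. Note that this path compresses in a single thread, so it does not reproduce `--threads=8`. `compare_gzip_levels` is a hypothetical helper for measuring the size/speed trade-off across gzip levels on your own data. Both function names and the sample data are illustrative assumptions, and timings will vary by machine.

```python
import gzip
import lzma
import tarfile
import time
from pathlib import Path


def make_tar_xz(src_dir: str, out_path: str,
                preset: int = 9 | lzma.PRESET_EXTREME) -> int:
    """Archive src_dir into out_path as .tar.xz and return the archive size in bytes.

    The default preset 9 | lzma.PRESET_EXTREME corresponds to `xz -9e`.
    tarfile/lzma compress in a single thread here (no equivalent of --threads).
    """
    with tarfile.open(out_path, mode="w:xz", preset=preset) as tar:
        # Store entries under the directory's own name, like `tar cJf out directory`.
        tar.add(src_dir, arcname=Path(src_dir).name)
    return Path(out_path).stat().st_size


def compare_gzip_levels(data: bytes, levels=(1, 4, 9)) -> dict:
    """Return {level: (compressed_size, seconds)} for each gzip level."""
    results = {}
    for level in levels:
        start = time.perf_counter()
        compressed = gzip.compress(data, compresslevel=level)
        elapsed = time.perf_counter() - start
        results[level] = (len(compressed), elapsed)
    return results


if __name__ == "__main__":
    # Illustrative input only; substitute a real dump to get meaningful numbers.
    sample = "".join(f"row {i},value {i * 7 % 13}\n" for i in range(50_000)).encode()
    for level, (size, secs) in compare_gzip_levels(sample).items():
        print(f"gzip -{level}: {len(sample)} -> {size} bytes in {secs:.3f}s")
```

Keep in mind that `-9e` needs several hundred MiB of memory for compression, so a lower preset may be the better choice on small machines.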

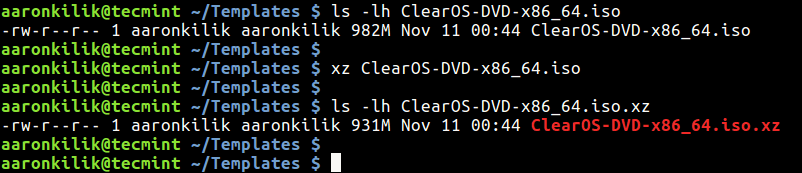

As xz compresses single files, we have to use tar to bundle everything first. And since the newer version of xz supports multithreading, we can speed up compression quite a bit. And because this file format is newer than most compressed file formats, most operating…

I’ve needed to compress several files using xz. xz is one of the best compression tools I’ve seen; it has compressed very large files to a fraction of their size. I had a 16 GB SQL dump, and it managed to compress it down to 263 MB. An xz file decompresses faster than bzip2 but still slower than gzip.

Some algorithms and implementations commonly used today are zlib, lz4, and xz. The most popular are lzma (xz) and deflate (gzip), but I know some were using lz4 or lzo, the latter in special applications (embedded, or requiring speed or low memory overhead). I have an 'ulterior motive' in this question: there is a relatively new compressor, Zstandard (zstd), that some claim is popular.

One option is to use tar as is and live with the fact that this happens, and select a not-so-high compression level for gzip/bzip2/xz so that they will not try too hard to compress the stream, thereby not wasting time trying to get another 0.5% compression which is not going to happen. Another is to compress the files, if appropriate, before putting them in the tar file, e.g. with the Python `tarfile` and `bz2` modules. This also has the disadvantages of point 1, and there is no straight extraction from the tar file, as some files will come out compressed that might not need decompression (as they were already compressed before backup).

The easiest way to enable gzip compression on your WordPress site is by using a caching or performance optimization plugin. Pandas’ `to_csv` function supports a `compression` parameter. By default it takes the value `'infer'`, which infers the compression mode from the destination path provided.
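Here is a minimal sketch of the "compress the files before putting them in the tar file" approach, assuming Python's `tarfile` and `bz2` modules. Compressible files are bz2-compressed one by one and stored with a `.bz2` suffix. Files whose extension is in a hypothetical `ALREADY_COMPRESSED` set are stored unchanged, so no time is spent recompressing them. The extension list and the `tar_with_per_file_bz2` name are illustrative assumptions, not a standard.

```python
import bz2
import io
import tarfile
from pathlib import Path

# Hypothetical set of suffixes treated as already compressed (stored as-is).
ALREADY_COMPRESSED = {".gz", ".bz2", ".xz", ".zst", ".zip", ".jpg", ".png", ".mp4"}


def tar_with_per_file_bz2(src_dir: str, out_path: str) -> list:
    """Write a plain (uncompressed) tar where compressible files are bz2-compressed first.

    Returns the member names written, relative to src_dir.
    """
    src = Path(src_dir)
    names = []
    with tarfile.open(out_path, mode="w") as tar:  # no outer compression layer
        for path in sorted(p for p in src.rglob("*") if p.is_file()):
            rel = path.relative_to(src).as_posix()
            data = path.read_bytes()
            if path.suffix.lower() not in ALREADY_COMPRESSED:
                # Compress this file on its own; add a suffix so it is recognisable.
                data = bz2.compress(data, compresslevel=9)
                rel += ".bz2"
            info = tarfile.TarInfo(name=rel)
            info.size = len(data)
            info.mtime = int(path.stat().st_mtime)
            tar.addfile(info, io.BytesIO(data))
            names.append(rel)
    return names
```

Restoring then takes two steps: extract the tar, then decompress only the `.bz2` members (for example with `bz2.decompress` or `bunzip2`). This is the "no straight extraction" drawback described above.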

# Speed up xz compression: zip

Instead of tar, you can use an archive format that allows you to compress on an individual basis; the zip format is an example that would work. This is unfavourable, however, if you have lots of small files in one directory that have similar patterns, as they get compressed individually.
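The small-files drawback is easy to check with a quick experiment. The sketch below uses hypothetical sample data (200 near-identical `.ini` files). It writes the same files once as a zip with per-file deflate and once as a tar with a single solid xz stream over the whole archive. Because xz sees all files in one stream, it can reuse their shared content, so the tar.xz should come out much smaller. Exact sizes depend on your Python and liblzma versions.

```python
import io
import tarfile
import zipfile


def make_similar_files(count: int = 200) -> dict:
    """Hypothetical sample data: many small files that share most of their content."""
    return {
        f"conf/host{i:03d}.ini": (
            f"[server]\nname=host{i:03d}\nport=8080\ntimeout=30\n"
            f"log_level=info\nmax_connections=100\n"
        ).encode()
        for i in range(count)
    }


def zip_size(files: dict) -> int:
    """Per-file compression: each member is deflated independently."""
    buf = io.BytesIO()
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        for name, data in files.items():
            zf.writestr(name, data)
    return len(buf.getvalue())


def tar_xz_size(files: dict) -> int:
    """Solid compression: one xz stream over the whole tar, so files share context."""
    buf = io.BytesIO()
    with tarfile.open(fileobj=buf, mode="w:xz") as tar:
        for name, data in files.items():
            info = tarfile.TarInfo(name=name)
            info.size = len(data)
            tar.addfile(info, io.BytesIO(data))
    return len(buf.getvalue())


if __name__ == "__main__":
    files = make_similar_files()
    print("zip (per-file deflate):", zip_size(files), "bytes")
    print("tar.xz (solid):", tar_xz_size(files), "bytes")
```

The flip side is that a zip lets you pull out one file without decompressing the rest, while a solid tar.xz has to be decompressed from the start.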
